Prevent Gen AI Hallucinations From Ruining Your Business

If your business is using artificial intelligence (AI), whether for basic applications or at scale, you have most likely encountered instances where it makes mistakes or produces results that raise questions about its reliability. Whatever form of AI you use, traditional or generative, there is always a risk of errors. However, with generative AI, this risk takes on a new form: hallucinations (outputs that seem plausible but are entirely fabricated or false). These hallucinations not only lead to misguided business decisions but also undermine trust in AI systems.

So, what are AI hallucinations exactly? How do they occur? And most importantly, how can you prevent them from happening? Here's everything you need to know about AI hallucinations and how to avoid them.

What Are Gen AI Hallucinations?


Generative AI (Gen AI) hallucinations are incorrect, nonsensical, or surreal outputs generated by large language models (LLMs), the models that allow generative AI tools and chatbots (e.g., ChatGPT) to process language in a human-like manner. These outputs may seem grammatically correct and coherent, but they lack any basis in reality and may not even address the original prompt or question. In other words, a Gen AI hallucination occurs when the model produces an output that deviates from factual accuracy or from the intended purpose of the request. Hallucinations can range from minor errors to completely misleading outputs. For example, an AI model might claim that the telephone was invented by Thomas Edison when the correct answer is Alexander Graham Bell. Or, in sales order processing, it might invent product names or prices that don't actually exist.

While the term "hallucination" is usually associated with human perception, it can be used in a metaphorical sense to describe these incorrect outputs from generative AI models. Similar to how humans can sometimes perceive things that don't actually exist, AI models can produce outputs that are not based on real data or patterns they've been trained on.

How Do AI Hallucinations Occur?

To understand why hallucinations occur in AI, it's crucial to grasp the core principles behind how LLMs work. These models are built on what's called a transformer architecture, which processes text as a sequence of tokens (words, word fragments, or characters) and predicts the next token based on probabilities derived from its training data. LLMs are trained on huge volumes of data to learn patterns and relationships between tokens, but they lack a genuine understanding of the meaning behind that data and have no built-in way to verify facts. This limited contextual understanding can lead to AI hallucinations, where the model produces a response that seems statistically plausible but is factually incorrect or misleading.
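To make the idea of next-token prediction concrete, here is a minimal sketch in Python. It is a toy, not a real LLM: the `NEXT_TOKEN_PROBS` table and its probabilities are invented for illustration, whereas a real model computes them with a neural network. The point it illustrates is that a wrong continuation can carry real probability, so the model can produce it without any signal that something is off.

```python
import random

# Hypothetical toy "model": for one context, the probability of each next token.
# The numbers are invented for illustration only.
NEXT_TOKEN_PROBS = {
    "The telephone was invented by": {
        "Alexander": 0.6,  # leads to the correct answer
        "Thomas": 0.3,     # leads to a plausible but wrong answer
        "Nikola": 0.1,
    },
}


def greedy_next_token(context: str) -> str:
    """Return the single most probable next token."""
    probs = NEXT_TOKEN_PROBS[context]
    return max(probs, key=probs.get)


def sample_next_token(context: str, rng: random.Random) -> str:
    """Draw the next token at random, weighted by its probability."""
    probs = NEXT_TOKEN_PROBS[context]
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


context = "The telephone was invented by"
rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_next_token(context, rng) for _ in range(1000)]
print("greedy choice:", greedy_next_token(context))
print("share of 'Thomas' across 1000 samples:", draws.count("Thomas") / 1000)
```

In this toy, always picking the most probable token gives the right start, but sampling regularly picks "Thomas". Nothing in the mechanism distinguishes a true continuation from a merely likely one.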

Aside from limited contextual understanding, there are several other factors that contribute to AI hallucinations. These include:

- Training Biases: AI models are only as good as the data they're trained on. If the training data is biased or skewed—whether intentionally or unintentionally—the AI model may learn inaccurate patterns, leading to false predictions or hallucinations.

- Poor Training: Another common reason for AI hallucinations is poor training. For AI models to perform well, they require high-quality training data that is relevant to your specific use case. If the training data is inadequate, outdated, or doesn't cover a broad range of scenarios, the model may produce outputs that are incomplete, irrelevant, or flat-out wrong.

- Overfitting and Underfitting: AI models can also hallucinate when they are overfitted or underfitted. Overfitting occurs when a model memorizes its training data too closely and fails to generalize well to new inputs. Underfitting, on the other hand, happens when the model doesn't learn enough from the data, leading to weak, nonsensical, or inexact responses.

Gen AI Hallucination Examples

Let's take a look at some Gen AI hallucination examples to illustrate how these errors can put businesses at risk:

- False positives: In sales order processing, an AI model may flag an order as high-priority due to a misinterpreted keyword, such as "urgent," even when the order doesn't require expedited handling. This could lead to unnecessary allocation of resources, increasing operational costs, and potentially delaying other orders that actually require urgent attention.

- Incorrect predictions: An AI model used to forecast sales orders might predict a surge in customer demand for a product based on past seasonal trends. However, if current market conditions have shifted, like changes in consumer preferences or economic slowdowns, the model's prediction may lead to overproduction or overstocking, resulting in financial losses for the business.

How To Prevent AI Hallucinations


Now that we have a better understanding of AI hallucinations and what causes them, the most important question is: how can you prevent them from occurring in the first place? Here are some tips you can implement to minimize the risk of AI hallucinations:

Utilize Specific and Relevant Training Data

Generative models rely on input data to carry out their tasks, so it's crucial to ensure that the training data is of high quality, relevant, and specific to your use case. To prevent hallucinations, ensure that the data used to train the AI model is diverse, balanced, and properly structured. This will not only help mitigate output bias but also enable the AI model to better understand its tasks and produce more accurate results.

Define Clear Model Objectives

What do you want the AI model to achieve? In order to reduce hallucinations, it's crucial to define clear objectives for your AI model. This includes identifying the specific tasks that the model will be responsible for, as well as any limitations or constraints on its use. By setting these parameters upfront, you ensure that the AI operates within a defined scope and is less likely to produce erroneous results.
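One way to enforce a defined scope is to check AI output against data you already trust. The sketch below is a minimal Python illustration under assumed names: `CATALOG`, `OrderLine`, and `validate_order_line` are hypothetical, and the SKUs and prices are made up. It rejects order lines whose product or price the business does not recognize, which is the sales order hallucination described earlier.

```python
from dataclasses import dataclass

# Hypothetical product catalog: the only SKUs and prices the business recognizes.
CATALOG = {
    "SKU-100": 19.99,
    "SKU-200": 49.50,
}


@dataclass
class OrderLine:
    """One order line as extracted by an AI model (assumed structure)."""
    sku: str
    unit_price: float
    quantity: int


def validate_order_line(line: OrderLine, tolerance: float = 0.01) -> list[str]:
    """Return a list of problems; an empty list means the line is within scope."""
    problems = []
    if line.sku not in CATALOG:
        problems.append(f"unknown product: {line.sku}")
    elif abs(CATALOG[line.sku] - line.unit_price) > tolerance:
        problems.append(
            f"price mismatch for {line.sku}: "
            f"got {line.unit_price}, catalog says {CATALOG[line.sku]}"
        )
    if line.quantity <= 0:
        problems.append(f"invalid quantity: {line.quantity}")
    return problems


# An invented SKU is reported instead of being passed on silently.
print(validate_order_line(OrderLine("SKU-999", 12.00, 1)))
```

A check like this does not stop the model from hallucinating, but it keeps out-of-scope output from reaching downstream systems.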

Create A Template for Your AI

When training a Gen AI model, it's important to provide it with a template or set of guidelines to follow. This template serves as a guide that helps the model structure its output. For example, if you are training an AI model to generate product descriptions, you can provide it with a template that outlines the key components of a product description, like features, benefits, and specifications. This will help the model generate more accurate and relevant descriptions, reducing the likelihood of hallucinations.
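As an illustration, a template can also be applied at prompt time rather than during training. This is an assumption of the sketch below, not a claim about any particular product. It uses Python's standard `string.Template`; the template wording, the `build_prompt` helper, and the sample product are all hypothetical.

```python
from string import Template

# Hypothetical prompt template covering the components named in the text:
# features, benefits, and specifications.
PRODUCT_DESCRIPTION_TEMPLATE = Template(
    "Write a product description for $name.\n"
    "Use only the facts listed below; if a detail is missing, leave it out.\n"
    "Features: $features\n"
    "Benefits: $benefits\n"
    "Specifications: $specifications\n"
)


def build_prompt(product: dict) -> str:
    """Fill the template; raises KeyError if a required field is missing."""
    return PRODUCT_DESCRIPTION_TEMPLATE.substitute(
        name=product["name"],
        features=", ".join(product["features"]),
        benefits=", ".join(product["benefits"]),
        specifications=", ".join(product["specifications"]),
    )


# Made-up sample product used only to show the filled template.
sample_product = {
    "name": "Trail Bottle",
    "features": ["double-wall insulation", "leak-proof lid"],
    "benefits": ["keeps drinks cold on long hikes"],
    "specifications": ["750 ml", "stainless steel"],
}
print(build_prompt(sample_product))
```

Because every field must be supplied, a missing specification fails loudly with a `KeyError` instead of leaving a gap for the model to fill with invented details.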

Incorporate Human Oversight

Human oversight plays a critical role in reducing AI hallucinations. While Gen AI is a powerful tool, it is not infallible. By involving humans in the process of reviewing and validating the AI outputs, you create a safety net that ensures errors are caught before they cause issues. A human reviewer can also bring in valuable context and domain knowledge that the AI might lack. This allows them to assess whether the AI's output is accurate, relevant, and aligned with the task at hand. Incorporating human oversight can help prevent AI hallucinations and improve the overall performance of your AI model.
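A common way to put this into practice is to send only uncertain outputs to a person. The sketch below assumes the AI system exposes some confidence score between 0 and 1, which not every system does. The `AIResult` type, the `route` function, and the 0.9 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class AIResult:
    text: str
    confidence: float  # hypothetical score in [0, 1] supplied by the AI system


def route(result: AIResult, threshold: float = 0.9) -> str:
    """Return "auto_approve" for confident outputs and "human_review" otherwise."""
    if result.confidence >= threshold:
        return "auto_approve"
    return "human_review"


results = [
    AIResult("Order 1042: 3 x SKU-100", confidence=0.97),
    AIResult("Order 1043: 1 x SKU-???", confidence=0.55),
]
for result in results:
    print(route(result), "-", result.text)
```

The threshold is a business decision: a lower value means less manual work but more risk that an error slips through. Since confidence scores can themselves be wrong, occasional spot checks of auto-approved outputs remain worthwhile.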

Retrieval-Augmented Generation (RAG)

Another highly effective way to prevent AI hallucinations is Retrieval-Augmented Generation (RAG)—a technique designed to make generative AI models more accurate and trustworthy by integrating real-time, external knowledge sources into their response process. Now, here's the issue: LLMs don't actually "understand" facts—they generate text based on statistical patterns from their training data. While this makes them great at forming coherent responses, it also means they can confidently produce incorrect or deceptive information (also known as hallucinations).

RAG fixes this flaw by allowing LLMs to retrieve external data through targeted queries and information retrieval techniques before generating a response. Instead of solely relying on their pre-trained knowledge (which may be outdated or limited), RAG lets AI access structured, real-time data from reliable sources such as databases, industry reports, or proprietary business documents.

This retrieval-before-generation process substantially reduces hallucinations because the AI is grounded in verified, up-to-date information rather than relying only on its potentially flawed internal representations. It also enhances transparency and trust because businesses can trace where the AI is pulling its information from. By integrating RAG, companies can help ensure their AI systems provide not just well-formed responses but fact-based, reliable insights. This leads to better decision-making, increased efficiency, and a lower risk of misleading or incorrect outputs.
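The retrieve-then-generate flow can be sketched in a few lines of Python. This is a deliberately simplified illustration: production systems typically use embedding-based search over a vector index, while this toy ranks documents by shared words. The `DOCUMENTS` content and the function names are hypothetical, and the final call to an LLM is left out.

```python
import re

# Hypothetical knowledge base standing in for business documents.
DOCUMENTS = {
    "pricing.md": "The Trail Bottle costs 19.99 EUR per unit.",
    "shipping.md": "Standard shipping takes 3 to 5 business days.",
    "returns.md": "Items can be returned within 30 days of delivery.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 1) -> list[tuple[str, str]]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(text)), name, text)
        for name, text in DOCUMENTS.items()
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(name, text) for score, name, text in scored[:top_k] if score > 0]


def build_grounded_prompt(question: str) -> str:
    """Build a prompt that asks the model to answer only from retrieved sources."""
    sources = retrieve(question)
    if not sources:
        return (
            f"Question: {question}\n"
            "No supporting documents were found; say that you do not know."
        )
    context = "\n".join(f"[{name}] {text}" for name, text in sources)
    return (
        "Answer using only the sources below and cite the source name.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}\n"
    )


print(build_grounded_prompt("How long does standard shipping take?"))
```

Because each source keeps its name in the prompt, an answer can be traced back to the document it came from. When nothing relevant is found, the prompt tells the model to say so rather than guess.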

What To Expect

Unlike traditional AI models, which are designed to follow a set of predetermined rules and instructions, Gen AI models produce outputs that are not limited by predefined rules. This creative flexibility is a double-edged sword: it can lead to groundbreaking innovations, but it can also result in hallucinations. Put simply, hallucinations are most likely when a generative AI solution encounters ambiguous or incomplete data. While hallucinations aren't likely to disappear, their nature will evolve as companies develop more sophisticated methods to address them.

As Gen AI models continue to advance, they will be better equipped to recognize nuances in language, understand the intent and context of a user's request, make more accurate predictions, and handle complex tasks with greater precision. They'll also become more adept at handling different types of data (e.g., text, images, audio) and will be able to process and analyze it more intelligently. All these improvements could help reduce the kinds of AI hallucinations we see today. While it's impossible to entirely eliminate the risk of hallucinations, businesses can take proactive steps to prevent and mitigate their impact.

By using high-quality training data, setting clear goals, providing templates, and incorporating human oversight, companies can ensure that their Gen AI models are producing accurate and relevant outputs that align with their intended objectives.

{{cta-block-blog}}

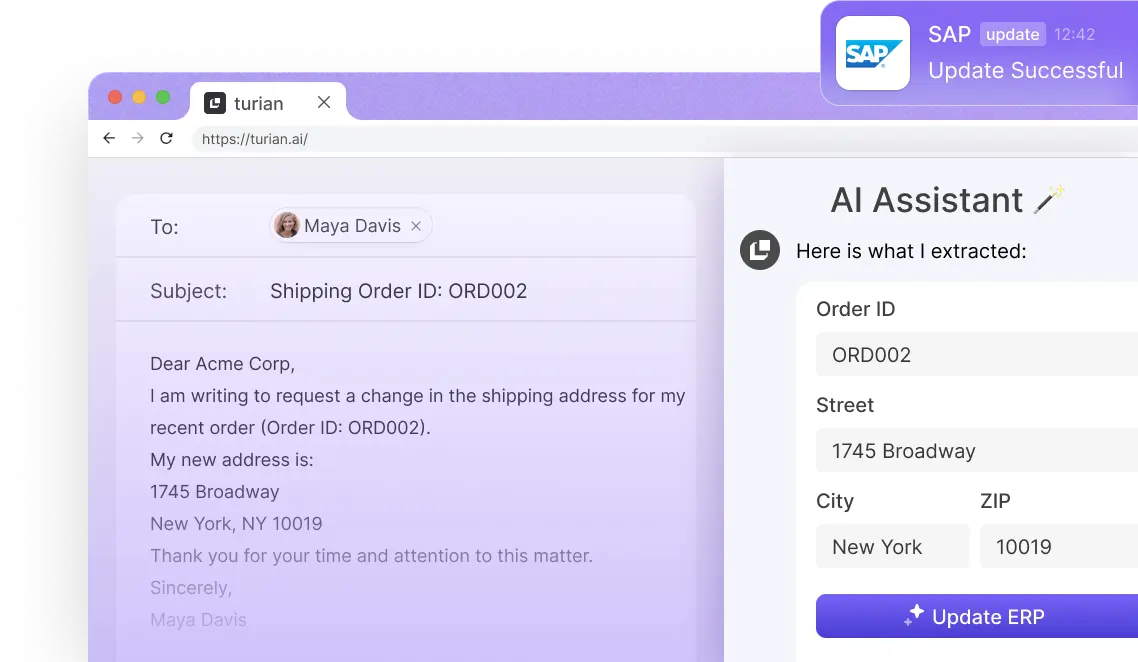

Say hi to your

AI Assistant!

Lernen Sie Ihren KI-Assistenten kennen!

.avif)

FAQ

Even well-trained AI models can experience "hallucinations," which means producing inaccurate or misleading results. While these models are trained on vast amounts of data, they aren't immune to errors. Hallucinations can occur due to a wide range of factors like insufficient training data, poorly defined prompts, or inherent limitations in how AI models process and interpret information. AI models, no matter how sophisticated, rely on statistical patterns and probabilities to generate responses rather than on an understanding of the meaning behind the data. This means that there is always a possibility of generating incorrect or nonsensical outputs, even with a well-trained AI model.

Human oversight is crucial in reducing the likelihood of hallucinations in AI models. By involving humans in the process of reviewing and validating AI-generated outputs, errors can be caught before they cause any major issues. A human reviewer can also bring in valuable context and domain knowledge that the AI might lack, allowing them to assess whether the output is accurate, relevant, and aligned with the intended task. Plus, human oversight can help identify any potential biases in the AI model, ensuring that its outputs are fair and unbiased. Human oversight serves as a safety net and can significantly improve the performance and reliability of AI models.

At turian, we understand that hallucinations can be a significant concern for businesses using AI models. That's why we have implemented several mechanisms to prevent our AI solutions from giving entirely made-up or incorrect information. turian solutions also use Retrieval-Augmented Generation (RAG) technology, which fuses the strengths of both retrieval-based and generative methods to produce more accurate and contextually relevant outputs. Plus, we are using custom-built business rules and algorithms to ensure that our AI assistants provide high-quality and plausible outputs.

It's impossible to completely eliminate the risk of AI hallucinations, but businesses can take proactive steps to minimize their impact. By using high-quality training data, setting clear goals, providing templates, and incorporating human oversight, companies can help ensure that their AI models produce accurate and relevant outputs that align with their intended objectives. Training AI models on diverse and credible sources of data can also help reduce the risk of producing inaccurate content. By monitoring outputs for consistency and relevance, businesses can better ensure their AI provides correct answers to user queries.
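One simple way to monitor consistency, sketched below in Python, is to ask the model the same question several times and measure how often the answers agree. This is an illustrative heuristic rather than a guarantee: a model can be consistently wrong. The `agreement` function and the sample answers are hypothetical.

```python
from collections import Counter


def agreement(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the share of responses that match it."""
    if not answers:
        raise ValueError("need at least one answer")
    # Normalize case and surrounding whitespace so trivial differences are ignored.
    normalized = [answer.strip().lower() for answer in answers]
    top_answer, count = Counter(normalized).most_common(1)[0]
    return top_answer, count / len(normalized)


# Made-up responses to the same question asked three times.
samples = ["Alexander Graham Bell", "alexander graham bell ", "Thomas Edison"]
top_answer, share = agreement(samples)
print(f"most common answer: {top_answer!r}, agreement: {share:.2f}")
```

A low agreement share is a useful trigger for sending the question to a human reviewer.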